Research and Innovation – Italian excellence beats Hi-Tech giants

Across Italy and Europe, top-tier research is being conducted both in universities and in centers of excellence. A prime example comes from Trento: the Technologies of Vision (TeV) Unit of Fondazione Bruno Kessler (FBK) recently secured first place in the prestigious international BOP Benchmark for 6D Object Pose Estimation Challenge 2024. The competition evaluates image recognition algorithms that estimate an object's 3D position and orientation; the FBK team won with its FreeZe v2 method, which does not rely on a training phase.

This achievement is remarkable: the FBK team outperformed 50 global competitors, including heavyweight players like NVIDIA, Meta, and Naver Labs.

It’s proof that world-class innovation can emerge even outside major metropolitan areas—what’s needed is talent, vision, and strategic investment.

Today it’s cutting-edge research; tomorrow it could translate into real-world applications: in space technologies, satellite systems, security, infrastructure maintenance, and advanced manufacturing. The next challenge is technology transfer—this requires policies that link research outcomes with industry, support the creation of a thriving entrepreneurial ecosystem, and provide the necessary funding.

LANGUAGE, VISION AND ROBOTICS: THE PROJECT THAT AIMS TO MAKE THE ROBOT UNDERSTAND WHAT TO DO, HOW AND WHERE

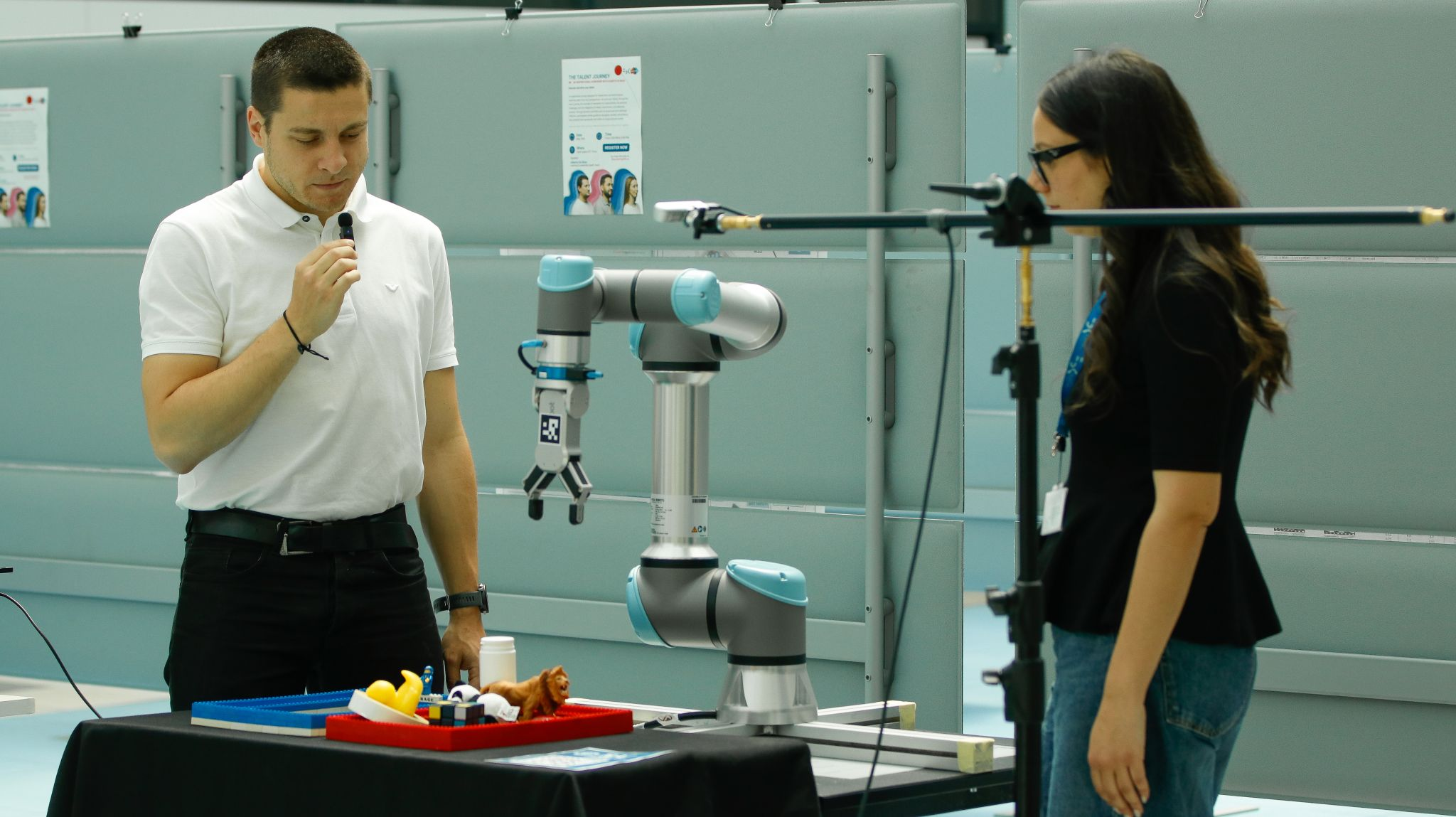

The Technologies of Vision Unit at FBK is also pioneering a highly innovative project that merges natural language processing, machine vision, and robotic manipulation. The goal is ambitious: to enable a robot to interpret written or spoken commands, understand the visual environment in front of it, and take appropriate action autonomously and reliably. The focus lies on robotic manipulation—specifically, a robot’s ability to interact physically with objects: to grasp, move, or avoid them.

A major technical hurdle is developing a form of “spatial reasoning” in the robot—for example, recognizing whether an object is directly accessible or whether others must first be moved in a cluttered scene. The project uses advanced language models like GPT-4, combined with internally developed algorithms designed to address gaps in spatial understanding—a known limitation of current large language models. To further enhance these abilities, the team is also exploring ways to generate targeted training data.
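To make the accessibility question concrete, here is a minimal Python sketch of one very simple way to decide whether other objects must be moved before a target can be grasped from above. It is an illustration only, not FBK's algorithm: the `Box` representation, the `blockers` function, and the example scene are all hypothetical, and it assumes each object has already been reduced to an axis-aligned bounding box.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box of one object, in metres (hypothetical representation)."""
    name: str
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z_min: float
    z_max: float


def footprints_overlap(a: Box, b: Box) -> bool:
    """True if the two boxes overlap when viewed from directly above."""
    return (a.x_min < b.x_max and b.x_min < a.x_max
            and a.y_min < b.y_max and b.y_min < a.y_max)


def blockers(target: Box, scene: List[Box]) -> List[str]:
    """Names of objects lying above the target that a top-down grasp would hit."""
    return [
        other.name
        for other in scene
        if other.name != target.name
        and footprints_overlap(target, other)
        and other.z_min >= target.z_max - 1e-6  # small tolerance for resting contact
    ]


# Hypothetical scene: a book lies on top of the cup, a bottle stands beside it.
cup = Box("cup", 0.0, 0.1, 0.0, 0.1, 0.0, 0.1)
book = Box("book", -0.05, 0.15, -0.05, 0.15, 0.1, 0.13)
bottle = Box("bottle", 0.3, 0.4, 0.0, 0.1, 0.0, 0.25)
scene = [cup, book, bottle]

print(blockers(cup, scene))     # ['book']
print(blockers(bottle, scene))  # []
```

A real cluttered scene needs far richer geometry (side grasps, partial occlusion, gripper width), which is part of why spatial reasoning remains a hard problem.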

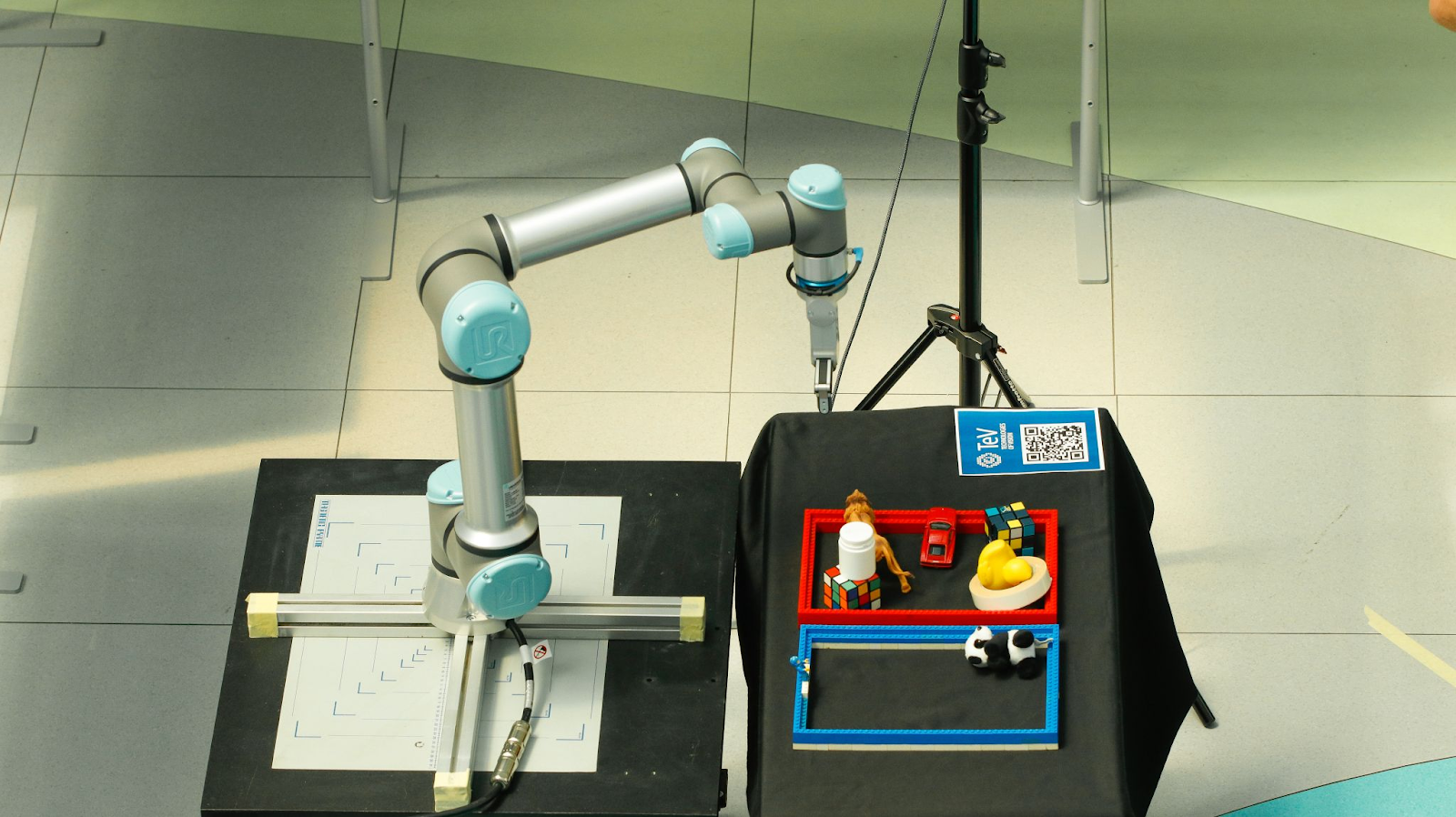

The system uses a 3D camera that captures both color and depth information, represented as a point cloud. A QR code placed on the robotic arm enables calibration between the camera and the arm's reference system. The process operates as follows: the user provides an image of the scene accompanied by a command (e.g., "pick up the cup to the left of the bottle"); the model parses the input and outputs the optimal grasp location. The robot then uses an inverse kinematics system to execute the action, avoiding collisions with itself, the camera, or the surrounding environment.
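The role of calibration can be illustrated with a short Python sketch: a grasp location found in the camera image has to be expressed in the arm's reference system before the arm can reach it. The code below is not taken from the FBK system. It assumes a standard pinhole camera model, and the intrinsics, the transform `T_BASE_CAM`, and the function names are hypothetical values chosen for the example.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def backproject(u: float, v: float, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Point:
    """Turn a pixel (u, v) with depth in metres into a 3D point in the camera frame."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def to_robot_frame(T_base_cam: Sequence[Sequence[float]], p_cam: Point) -> Point:
    """Apply a 4x4 homogeneous camera-to-base transform to a camera-frame point."""
    x, y, z = p_cam
    bx, by, bz = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in T_base_cam[:3])
    return (bx, by, bz)


# Hypothetical calibration result: the camera sits 1 m above the base plane,
# 0.5 m in front of the base, looking straight down (180-degree rotation about x).
T_BASE_CAM = [
    [1.0, 0.0, 0.0, 0.5],
    [0.0, -1.0, 0.0, 0.0],
    [0.0, 0.0, -1.0, 1.0],
    [0.0, 0.0, 0.0, 1.0],
]

# Hypothetical intrinsics for a 640x480 sensor.
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0

# Grasp pixel at the image centre, 0.8 m away from the camera.
grasp_cam = backproject(320, 240, 0.8, FX, FY, CX, CY)
grasp_base = to_robot_frame(T_BASE_CAM, grasp_cam)
print(grasp_base)  # approximately (0.5, 0.0, 0.2)
```

In practice the transform would be estimated from the marker on the arm rather than written by hand, and the resulting point would be passed to the inverse kinematics solver as the target for the gripper.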

In leading laboratories like FBK's, work is advancing toward a unified Vision-Language-Action (VLA) model: a single neural network that integrates vision, language, and action. High-precision industrial cameras (with 0.2 mm accuracy and costing over €15,000) are already being used to test the system in complex environments.

The first public paper on this work has already been released, and FBK's robotics lab is now among the pioneers in Italy in integrating natural language, computer vision, and robotic manipulation, a field with strong potential for industrial and scientific collaboration.

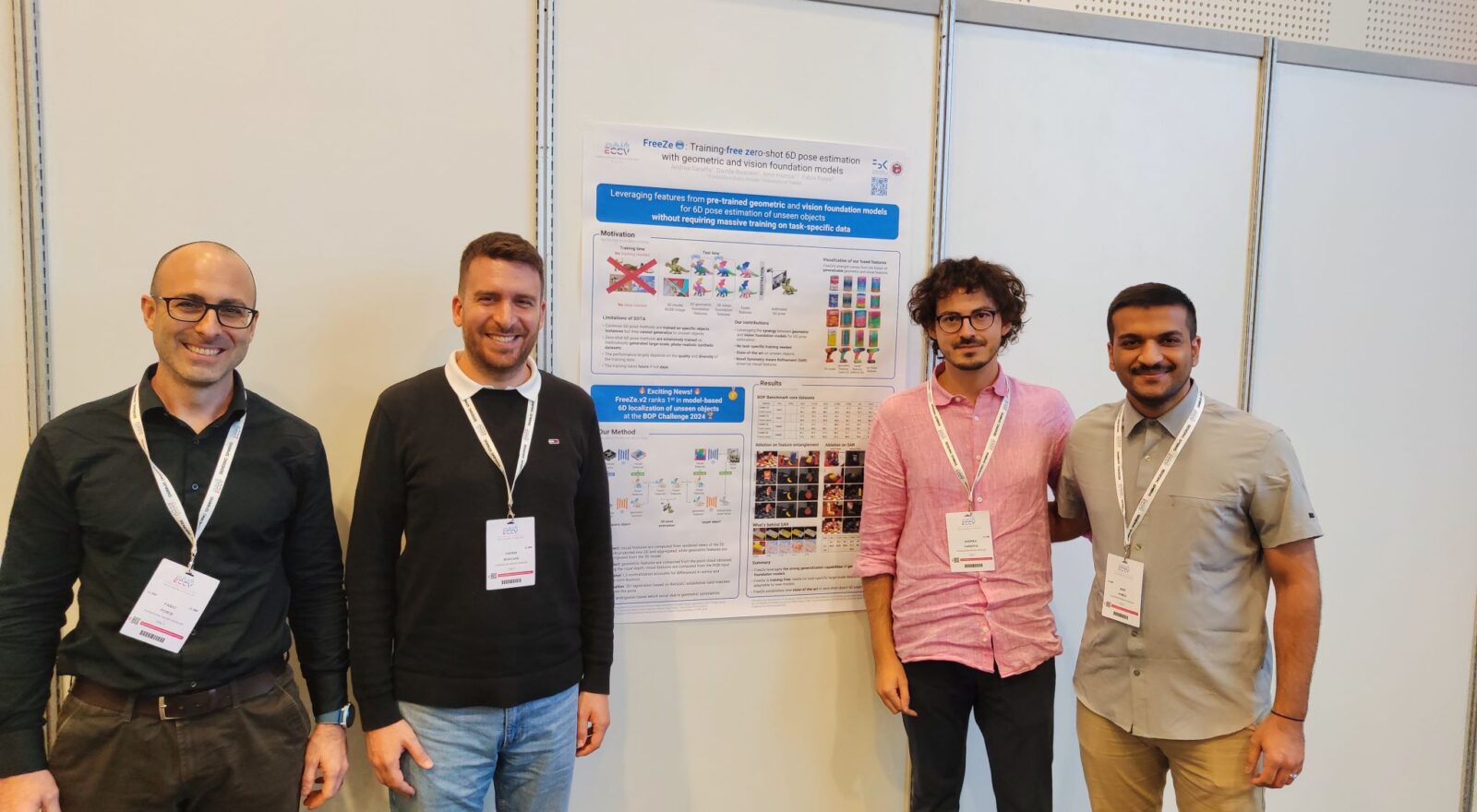

The cover image features the FBK team: Fabio Poiesi, Davide Boscaini, Andrea Caraffa, and Amir Hamza.