Algorithms against fake news

Artificial intelligence as a valuable ally for press and fact-checkers in promoting truthful and accurate information

On the first weekend of May 2023, Rovereto came alive with Wired Next Fest 2023. One of the talks, entitled “Algorithms against fake news”, was hosted in the beautiful setting of Palazzo del Bene. Riccardo Gallotti, head of the CHuB lab research unit at FBK’s Digital Society Center, and Chiara Zanchi, researcher in Glottology and Linguistics at the University of Pavia, took turns on stage.

The discussion started from the concept of lack of awareness. Both with fake news and with the reporting of violent events such as feminicides or traffic accidents, the journalist or the person spreading the information does not always intend to do so in a harmful or inappropriate way in order to get more clicks; often it is simply an error of judgment.

Chiara Zanchi introduced the topic by providing some linguistic examples that make it clear how the choice of a specific word or syntactic construct determines the perception of an event.

For example, the sentence “The murderous rampage broke out” paints an event caused by something external, as is the case with a thunderstorm, when in fact there is a direct perpetrator of that murderous rampage.

Similarly, in the sentence “In the past 6 months, violence has escalated,” the human agent is de-emphasized and the event is perceived as spontaneous, as something that does not depend on the will of any individual.

In addition to syntactic constructions, words too carry weight. A “tragedy” is an event that includes one or more participants who die (e.g., earthquake, flood); the term “killing”, on the other hand, implies an entity that kills and one that is killed.

It is therefore instantly clear that a given narrative of a fact translates into a different perception of it in terms of the attribution of responsibility and the roles played by those involved. In this case we are not dealing with fake news, but with a narrative that is sometimes unintentional and that may nevertheless create erroneous perceptions in the audience.

Regarding the now very common term fake news, Riccardo Gallotti was quick to point out that it is actually a very generic and undoubtedly overused term, referring to both something slightly bad and something very serious. Moreover, it is a passive term that does not show the malicious intent of those who spread untruthful news. Better, therefore, to speak of misinformation or disinformation.

Disinformation is intentional: false news is spread knowingly. Misinformation, by contrast, is passed on by someone who believes it to be true when it is not.

Also, we should not underestimate the manipulative effects accentuated by bots amplifying the signal or stimulating particular groups, or trolls activating messages and spreading them for a fee.

And this is where Artificial Intelligence comes in, with one common purpose: to help human beings convey correct information.

As part of the “Responsibility framing” project, carried out together with the Universities of Amsterdam, Groningen and Turin, Chiara Zanchi analyzed a corpus of articles on feminicide from the local and national Italian press. She noted how syntax and choice of terminology considerably influence the perception of the roles of victim and perpetrator, depending, for example, on whether an active construct (“the man killed the woman”) or a passive one (“the woman was killed”) is used, or on whether the focus shifts from the protagonists (victim and murderer) to the incident (the event or fact). With the help of a team of computational linguists, a perception survey was then developed and administered to 200 people, with questions regarding focus (who or what is being highlighted?), cause (emotion or human being?) and attribution of responsibility (blame). The results confirmed that specific syntactic or lexical choices do indeed determine different perceptions of blame and responsibility for a fact. From here, an AI algorithm was created that can assign quantitative scores for focus, blame, and cause, and that can become a valuable ally for journalists, for example.

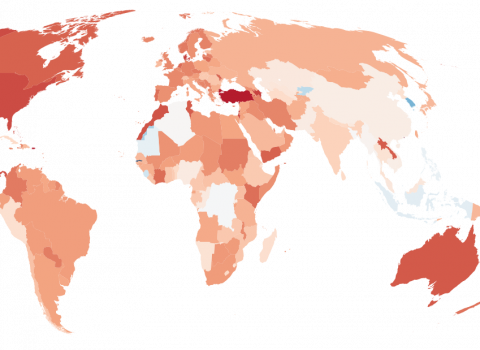

The three-year European project AI4TRUST, for its part, is coordinated by Fondazione Bruno Kessler in collaboration with 17 partners. It aims to combat misinformation and disinformation within the European Union by creating a reliable environment that integrates automated monitoring of social media and news media with advanced artificial intelligence-based technologies to improve the work of human fact-checkers. Disinformation now spreads too fast on the web, especially on social media and particularly when it concerns health and climate change, and the amount of content is so large that humans alone cannot check it in a timely manner.

What is needed, therefore, is a hybrid artificial intelligence-based system, i.e., one that supports the work of humans in combating disinformation, which is precisely what the AI4TRUST project sets out to build. The system will monitor various social media and information sources in near real-time, using the latest artificial intelligence algorithms to analyze text, audio and video in seven different languages. It will thus be able to select content with a high risk of disinformation (e.g., a previously used or old image, or highly emotional text) and flag it for review by professional fact-checkers, whose input will provide additional information for improving the algorithms themselves.
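To make the idea of flagging high-risk content for human review more concrete, here is a minimal sketch in Python. It is not the AI4TRUST system: the `Item` fields, the weights, the one-year cutoff and the threshold are all assumptions chosen for illustration, loosely based on the risk signals mentioned above (a reused image, an old image, highly emotional text).

```python
from dataclasses import dataclass

@dataclass
class Item:
    """A piece of monitored content with hypothetical risk signals."""
    item_id: str
    image_seen_before: bool  # assumed signal: the image was already used elsewhere
    image_age_days: int      # assumed signal: age of the attached image
    emotion_score: float     # assumed output of an emotion classifier, in [0, 1]

def risk_score(item, old_image_days=365):
    """Combine the signals into one score; the weights are illustrative only."""
    score = 0.0
    if item.image_seen_before:
        score += 0.4
    if item.image_age_days > old_image_days:
        score += 0.3
    score += 0.3 * item.emotion_score
    return score

def triage(items, threshold=0.5):
    """Return the items to send to fact-checkers, highest risk first."""
    flagged = [item for item in items if risk_score(item) >= threshold]
    return sorted(flagged, key=risk_score, reverse=True)

# Verdicts from human fact-checkers are stored so that they could later be
# used to adjust the weights or retrain a model (the human-in-the-loop step).
feedback = []

def record_verdict(item, is_disinformation):
    feedback.append((item.item_id, is_disinformation))

items = [
    Item("a", image_seen_before=True, image_age_days=800, emotion_score=0.9),
    Item("b", image_seen_before=False, image_age_days=10, emotion_score=0.2),
    Item("c", image_seen_before=True, image_age_days=10, emotion_score=0.5),
]
queue = triage(items)
record_verdict(queue[0], True)
```

In this sketch the algorithm only prioritizes; the decision on each flagged item stays with the fact-checker, and the recorded verdicts are what would feed back into improving the scoring.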

The idea for the project germinated in the early stages of the Covid-19 pandemic, when FBK’s DIGIS center began tracking the virus-related infodemic. The platform developed at the time, however, considered only texts and tracked disinformation only by analyzing links. As a result, if a news item came from a traditionally reliable news outlet, it was classified as truthful, which cannot be guaranteed in all circumstances.
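The link-based approach described above can be illustrated with a short Python sketch. This is not the code of the FBK platform: the domain lists and the function name `classify_by_link` are hypothetical, and a real system would rely on a curated source-reliability database.

```python
from urllib.parse import urlparse

# Hypothetical reliability lists, for illustration only.
RELIABLE_DOMAINS = {"reliable-news.example"}
UNRELIABLE_DOMAINS = {"dubious-site.example"}

def classify_by_link(url):
    """Label a news item using only the domain of the link it came from."""
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    if host in RELIABLE_DOMAINS:
        return "reliable"
    if host in UNRELIABLE_DOMAINS:
        return "unreliable"
    return "unknown"

label = classify_by_link("https://www.reliable-news.example/2020/03/some-article")
```

The sketch shows the limitation directly: the label depends only on where the link points, so an inaccurate article from a usually reliable outlet is still labeled "reliable", and content without a link (an image, a video, plain text) cannot be assessed at all.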

Therefore, the need was felt for a broader project that would also analyze other sources.

But in all this, what role does the human being play? Where do journalists and fact-checkers fit in this scenario?

Actually, the human being is essential to the development and research of these new, potential tools. In the case of Chiara Zanchi’s project, the native speakers who took the survey were of paramount importance, and in any case the last word will always lie with the human professional who then packages the information. The purpose, then, is only to provide a useful tool to gain awareness of the linguistic choices one makes when writing a news text, in light of how the way one tells the story influences the perception of the social community.

Similarly, the AI4TRUST project is also based on the concept of human in the loop: in fact, the research involves journalists and fact-checkers who benefit from the input of AI computer technicians to verify the veracity of an image, text, or video. The ultimate goal is to create a virtuous circle in which AI works alongside fact-checkers to promote accurate information.