Explainable AI for healthier lifestyles

Virtual coaching systems can help people with chronic diseases improve their health day by day

FBK is devoted to designing and implementing technology platforms based on artificial intelligence (AI) techniques that help citizens become better-informed managers of their own health and treatment, and more active partners in their interactions with health professionals.

To achieve this ambitious goal, we combine expertise from several fields of excellence and push forward the frontier of knowledge in each of them: from natural language processing (NLP) to persuasive technologies, and from machine learning to tailored software and app development.

Such digital technologies can help health systems prevent and monitor diseases, in particular through virtual coaching systems that track changes in patients’ daily habits. In this way, people with nutrition-related diseases can adopt support tools that help them understand how their condition is progressing and how to manage it.

These tools and their underlying logic can be implemented following a flexible scheme. This matters because it makes it possible to reuse available technologies and transfer them from one disciplinary domain to another, reducing what has to be re-engineered to the strict minimum.

This approach requires an automatic reasoning engine that can represent its inputs with respect to predetermined objectives.

Explainable AI aims at building intelligent systems that can provide a clear, human-understandable justification of their decisions. This holds for both rule-based and data-driven methods. In the management of chronic diseases, the users of such systems are patients who must follow strict dietary rules to keep their condition under control. After receiving the user’s food intake as input, the system reasons about whether the user is following an unhealthy behavior. It then has to communicate the results clearly and effectively; that is, the output message has to persuade users to follow the right dietary rules.
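The reasoning step described above can be illustrated with a minimal rule check over one day of logged food intake. This is only a sketch under stated assumptions, not FBK’s reasoner (which, per the highlights, is SPARQL-based and works over an ontology): the `MonitoringRule` and `Intake` types, the food categories, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonitoringRule:
    """Hypothetical expert-defined rule: a daily limit on servings of a food category."""
    rule_id: str
    category: str
    max_servings: float


@dataclass(frozen=True)
class Intake:
    """One logged food intake event (hypothetical structure)."""
    food: str
    category: str
    servings: float


def detect_violations(rules, intakes):
    """Return (rule, total) pairs for every rule whose daily limit is exceeded."""
    # Aggregate the day's servings per food category.
    totals = {}
    for item in intakes:
        totals[item.category] = totals.get(item.category, 0.0) + item.servings
    # Compare each aggregated total against the matching rule's limit.
    violations = []
    for rule in rules:
        total = totals.get(rule.category, 0.0)
        if total > rule.max_servings:
            violations.append((rule, total))
    return violations


# Hypothetical rules and one day's log, for illustration only.
rules = [
    MonitoringRule("R1", "red_meat", 1.0),
    MonitoringRule("R2", "sugary_drinks", 0.0),
]
day_log = [
    Intake("hamburger", "red_meat", 1.0),
    Intake("steak", "red_meat", 1.0),
    Intake("cola", "sugary_drinks", 1.0),
    Intake("salad", "vegetables", 2.0),
]

for rule, total in detect_violations(rules, day_log):
    print(f"{rule.rule_id}: {total:g} serving(s) of {rule.category} (limit {rule.max_servings:g})")
```

With this sample log, rules R1 and R2 are both reported as violated, while the vegetable servings trigger nothing because no rule covers them. Each detected violation is the kind of inconsistency that the system would then need to explain to the user.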

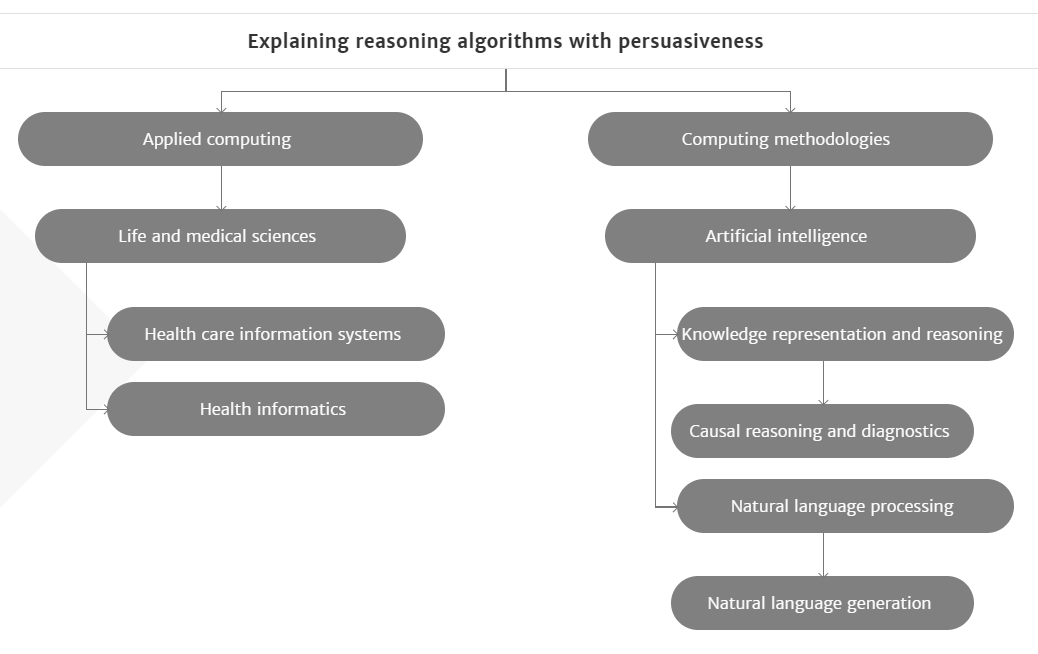

In the research paper entitled “Explainable AI meets persuasiveness: Translating reasoning results into behavioral change advice”, FBK researchers Mauro Dragoni, Ivan Donadello and Claudio Eccher address the main challenges in building such systems:

- the Natural Language Generation (NLG) of messages that explain the inconsistencies detected by the reasoner;

- the effectiveness of such messages at persuading the users.

The results show that persuasive explanations help reduce users’ unhealthy behaviors.

Highlights

• An Explainable AI system based on logical reasoning that supports the monitoring of users’ behaviors and persuades them to follow healthy lifestyles.

• An ontology is used by a SPARQL-based reasoner to detect undesired situations in users’ behaviors, i.e., to verify whether users’ dietary and physical activity actions are consistent with the monitoring rules defined by domain experts.

• The core of the Natural Language Generation component relies on templates (a grammar) that encode the different parts (feedback, arguments and suggestion) of a persuasive message.

• Results compare the persuasive explanations with simple notifications of inconsistencies and show that the former are able to support users in improving their adherence to dietary rules.
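The template-based generation described in the highlights can be pictured with a small sketch that fills one template per message part (feedback, argument, suggestion) from a detected violation, next to a bare notification of the same inconsistency for comparison. The template wording, slot names, and the `Violation` fields are hypothetical and much simpler than the grammar used in the paper; the example data is illustrative only.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class Violation:
    """Hypothetical reasoner output: which rule was broken and by how much."""
    food: str
    consumed: int
    limit: int
    period: str
    reason: str       # used by the argument part: why the rule matters
    alternative: str  # used by the suggestion part: a healthier option


# One template per part of the persuasive message (hypothetical wording).
FEEDBACK = Template("This $period you ate $food $consumed times, but the recommended limit is $limit.")
ARGUMENT = Template("Eating too much $food $reason.")
SUGGESTION = Template("Next time, why not try $alternative instead?")


def persuasive_message(v: Violation) -> str:
    """Fill feedback, argument and suggestion templates and join them into one message."""
    slots = {
        "food": v.food,
        "consumed": v.consumed,
        "limit": v.limit,
        "period": v.period,
        "reason": v.reason,
        "alternative": v.alternative,
    }
    # Template.substitute converts values with str() and raises KeyError on missing slots.
    return " ".join(t.substitute(slots) for t in (FEEDBACK, ARGUMENT, SUGGESTION))


def simple_notification(v: Violation) -> str:
    """Baseline: report the inconsistency only, with no argument or suggestion."""
    return f"Rule violated: {v.food} ({v.consumed}/{v.limit} per {v.period})."


# Illustrative, hypothetical violation.
v = Violation("red meat", 3, 2, "week", "is linked to higher cardiovascular risk", "fish or legumes")
print(persuasive_message(v))
print(simple_notification(v))
```

Keeping each part in its own template lets the same feedback, argument, or suggestion be swapped independently, which is one plausible way to produce varied messages from a small set of rules; the baseline function mirrors the "simple notifications of inconsistencies" that the persuasive explanations were compared against.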